So, this is GPT-3.5-turbo running on Vapi. There’s a secret code in the prompt. Press the button to start; it’s your job to make it squeal:

If that doesn't work, try it here: Demo Link

Okay, so how did we do it? Short version: a lot of engineering, boring infrastructure, and models. You know the drill.

We want voice interactions to feel warm and human, just like chatting with a friend. We're making voice AI as simple, reliable, and accessible as any other API in your stack.

So, who's Vapi for?

One word: Developers.

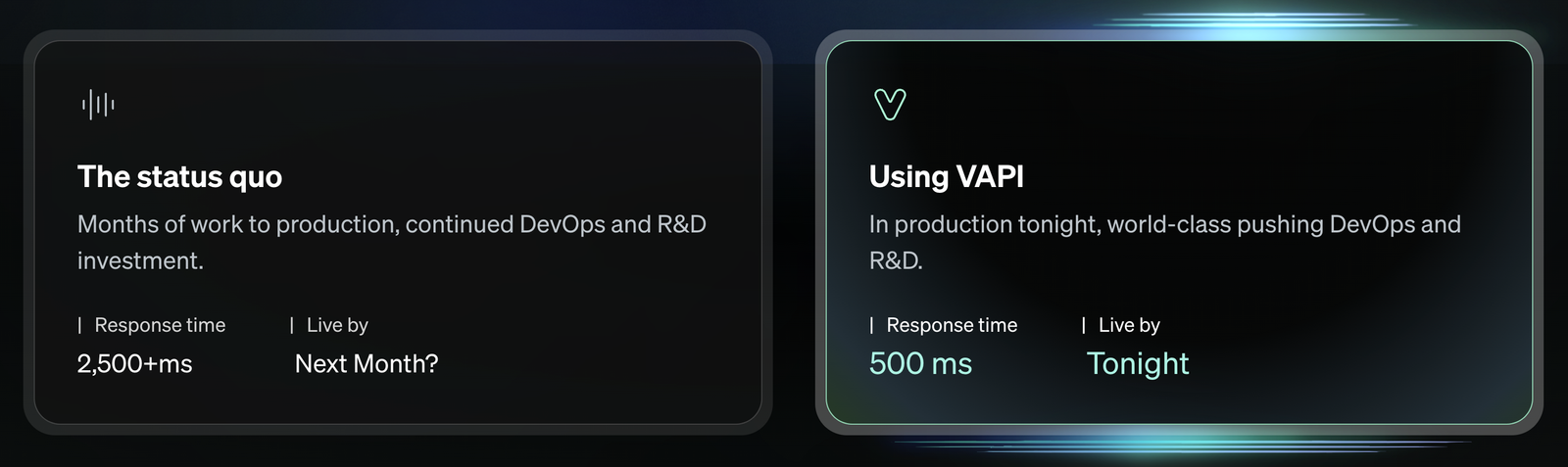

Vapi solves the foundational challenges that voice AI applications face: simulating the flow of natural human conversation, meeting real-time, low-latency demands, taking actions (function calling), and extracting conversation data.
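To make “taking actions” a bit more concrete: a common function-calling pattern (an assumption here, not necessarily how Vapi does it internally) is for the LLM to emit a tool call as JSON with a `name` and `arguments`, which the orchestrator routes to your code and then feeds the result back to the model. A minimal sketch, where `TOOLS`, `tool`, `book_appointment`, and `dispatch` are all hypothetical names:

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the assistant is allowed to call (hypothetical).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def book_appointment(date: str, time: str) -> Dict[str, str]:
    # Hypothetical: a real app would call a calendar or booking API here.
    return {"status": "booked", "date": date, "time": time}


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in an LLM tool call and return its result as JSON for the next LLM turn."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool: {call['name']}"})
    return json.dumps(fn(**call.get("arguments", {})))


if __name__ == "__main__":
    print(dispatch('{"name": "book_appointment", "arguments": {"date": "2024-03-01", "time": "10:00"}}'))
    # {"status": "booked", "date": "2024-03-01", "time": "10:00"}
```

Returning an error object for an unknown tool, instead of raising, lets the model recover gracefully and tell the caller something went wrong.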

Whether you’re building a strictly “turn-based” use case (like appointment setting) or a robust agentic voice application (like a virtual assistant), Vapi is built to handle it end-to-end. Vapi runs on any platform: the web, mobile, telephony, and even hardware devices like a Raspberry Pi (RPi).

Ok, but how does Vapi work?

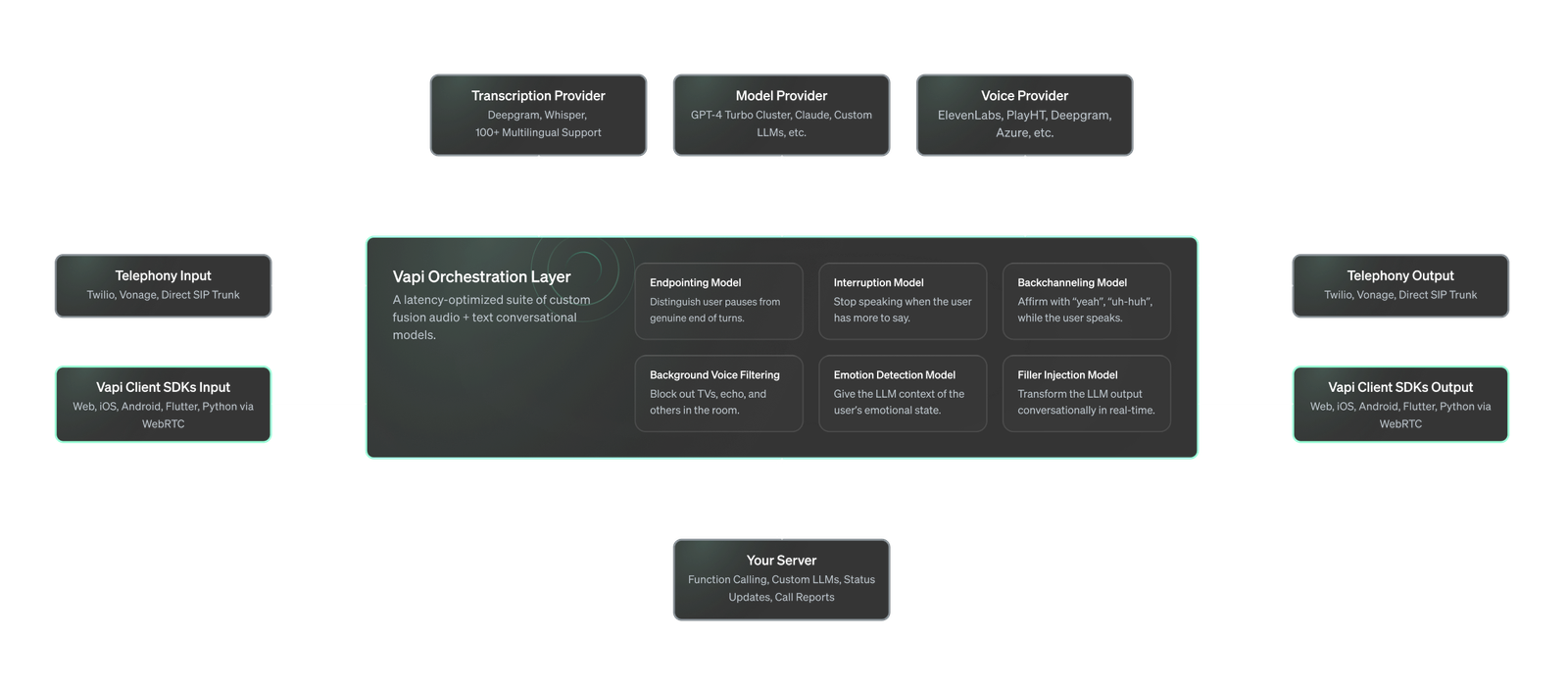

Vapi acts as an orchestration layer over Speech-to-Text (STT), Large Language Model (LLM), and Text-to-Speech (TTS) providers. You can bring your own LLMs and custom voices, and we make it all talk like a person.
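Conceptually, one conversational turn is STT, then LLM, then TTS, with each provider swappable. Here is a minimal, non-streaming sketch of that orchestration idea. `VoicePipeline` and the stub providers are hypothetical stand-ins, not Vapi's API, and the “audio” is just UTF-8 bytes so the example runs without any real services:

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, history: List[Dict[str, str]]) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoicePipeline:
    """Hypothetical orchestrator: one conversational turn is STT -> LLM -> TTS."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    system_prompt: str
    history: List[Dict[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Seed the conversation with the system prompt.
        self.history.append({"role": "system", "content": self.system_prompt})

    def handle_turn(self, audio: bytes) -> bytes:
        user_text = self.stt.transcribe(audio)  # 1. speech -> text
        self.history.append({"role": "user", "content": user_text})
        reply_text = self.llm.reply(self.history)  # 2. text -> reply text
        self.history.append({"role": "assistant", "content": reply_text})
        return self.tts.synthesize(reply_text)  # 3. reply text -> speech


# Stub providers so the sketch runs without any real STT/LLM/TTS service.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class EchoLLM:
    def reply(self, history: List[Dict[str, str]]) -> str:
        return "You said: " + history[-1]["content"]


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


if __name__ == "__main__":
    bot = VoicePipeline(EchoSTT(), EchoLLM(), BytesTTS(), "You are a friendly assistant.")
    print(bot.handle_turn(b"hello"))  # b'You said: hello'
```

Because each stage is typed against a small `typing.Protocol`, bringing your own LLM or voice amounts to passing in a different object with the same method.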

We’ve built latency optimizations, such as end-to-end streaming and colocated servers, that shave off every possible millisecond. We also manage interruptions, turn-taking, and other conversational dynamics.
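End-to-end streaming means speech synthesis doesn't have to wait for the full LLM reply: text can be spoken sentence by sentence as tokens arrive, and an interruption can cancel whatever is still being said. A minimal asyncio sketch of that idea, assuming a fake token stream and fake playback. All names are hypothetical, and a real system would detect interruptions from the caller's voice activity rather than from a timer:

```python
import asyncio
from typing import AsyncIterator, List, Optional

SENTENCE_END = (".", "!", "?")


async def fake_llm_tokens(text: str, delay: float = 0.01) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming LLM: yields one word at a time."""
    for word in text.split(" "):
        await asyncio.sleep(delay)
        yield word + " "


async def sentences(tokens: AsyncIterator[str]) -> AsyncIterator[str]:
    """Group streamed tokens into sentences so TTS can start before the LLM finishes."""
    buffer = ""
    async for token in tokens:
        buffer += token
        if buffer.rstrip().endswith(SENTENCE_END):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():  # flush a trailing fragment with no final punctuation
        yield buffer.strip()


async def speak(text: str, spoken: List[str]) -> None:
    """Hypothetical stand-in for streaming TTS: 'plays' each sentence as soon as it is ready."""
    async for sentence in sentences(fake_llm_tokens(text)):
        await asyncio.sleep(0.01)  # pretend synthesis + playback time
        spoken.append(sentence)


async def run_turn(reply: str, interrupt_after: Optional[float]) -> List[str]:
    """Speak `reply`; if `interrupt_after` is set, simulate the user barging in after that many seconds."""
    spoken: List[str] = []
    task = asyncio.create_task(speak(reply, spoken))
    if interrupt_after is not None:
        await asyncio.sleep(interrupt_after)
        task.cancel()  # the user started talking: stop speaking right away
    try:
        await task
    except asyncio.CancelledError:
        pass  # cancellation is expected here; keep whatever was already spoken
    return spoken


if __name__ == "__main__":
    print(asyncio.run(run_turn("Hello there. How can I help?", None)))
    # ['Hello there.', 'How can I help?']
    print(asyncio.run(run_turn("Hello there. How can I help?", 0)))
    # []
```

Chunking on sentence boundaries is just one simple choice; it trades a little latency for TTS input that sounds natural, since most voices render complete sentences better than single words.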

We built all this so developers can build cool stuff without worrying about the underlying tech, kind of like what Twilio does for telephony.

Make one yourself

Head over to the Vapi Dashboard to make one yourself: just add a prompt, pick your model, pick your voice, and you’re good to go. You can even put it behind a phone number and share it around.

Docs & More

Check out our docs here for more details on the underlying system, the API, client libraries, and more.